LLMs, Longform, and Low-Code: What Worked (and Broke) in Real-World Tests

LLMs help with tight, scoped tasks: cutting redundancy, giving multipass feedback, building summary maps, and brainstorming. But they struggle with long inputs, continuity, and consistent voice. They are useful for scaffolding and agents, not autopilot. Keep humans on structure, logic, and style. Use models to spot patterns.

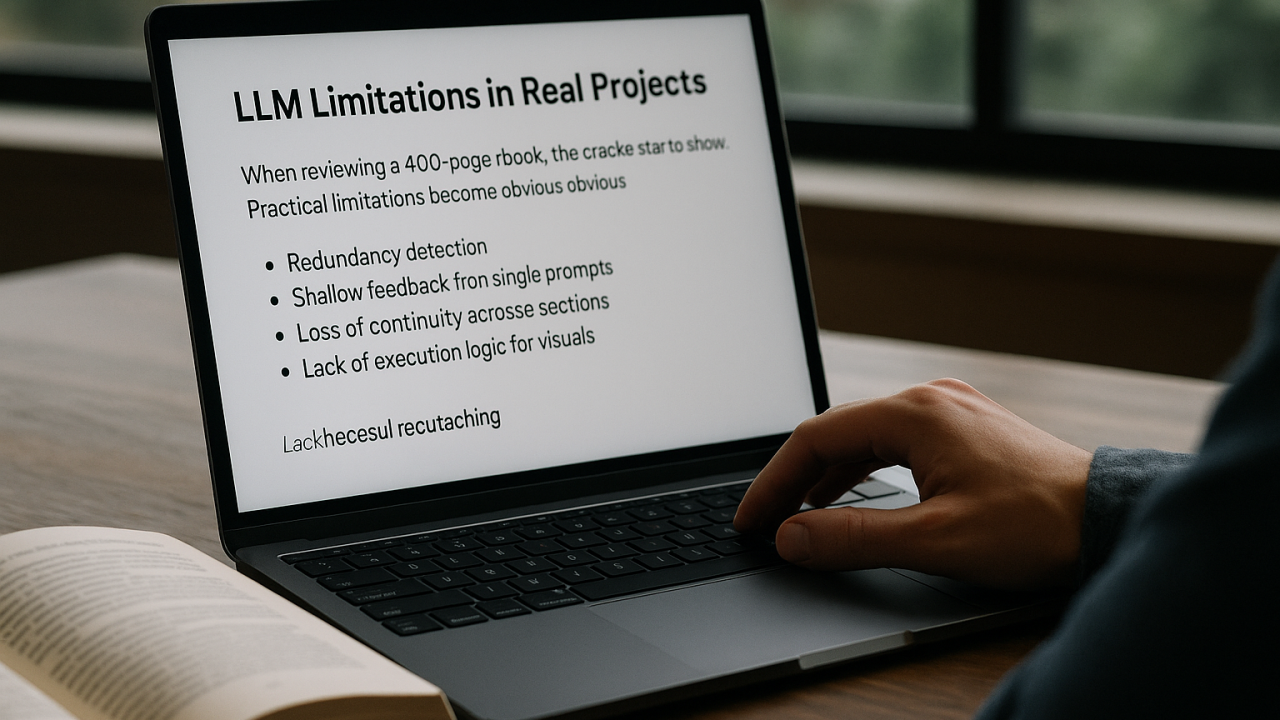

We talk a lot about what LLMs can do. But when you're deep into a real project — like reviewing a 400-page book or building tools around it — the cracks start to show. That’s when the practical limitations (and the underlying reasons) become obvious.

I tested ChatGPT, Claude, and Gemini, along with tools such as Base44 and Gamma, across demanding workflows. I didn’t use them to write the book, but to analyze it, cut bloat, test structure, and brainstorm visuals. Think of them as a tireless (if flawed) developmental editor’s assistant. I also experimented with AI coding platforms to build companion tools for faster delivery.

Some results were genuinely helpful. Others failed quietly — or loudly. Here’s what worked, what broke, and why.